While searching for an answer on a completely different topic, I came across a few posts about lot testing. People were asking how many shots should be fired and whether just 5 or 10 shots give any meaningful information. So I pulled out one of my old tools to see for myself.

Before I retired, I spent many years in a job that required me to use statistics both to validate the performance of systems and to verify that improvements made to a system were meaningful (statistically significant). The (Excel) tools I created for these tasks were validated by statisticians who were university professors. Although I am fairly well versed in statistical analysis, I am not a statistician myself. So if any statisticians here are willing to check my conclusions about applying these tools to lot testing, that would be great. Anyway...

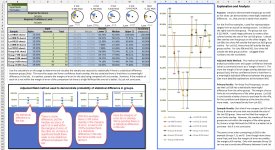

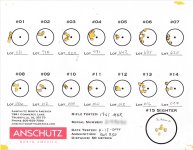

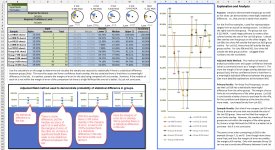

The screenshots above are from my analysis workbook, where I compare the lots against one another. The first five are actual test results provided by Anschutz North America; the other five are additional examples I supplied. I purchased one of the lots (Lot 010) at the time because it had the smallest group of the fourteen lots they tested. The question I wanted answered now is: are five-shot groups adequate for demonstrating that one lot is conclusively (statistically) better than another? The answer is yes. Lot 010 is conclusively better than the other lots, even though only five shots were fired per lot for the comparison. That said, more shots are certainly better, and I don't want to imply otherwise. The margin of error shrinks as the number of shots increases, so (for numerous reasons) the more shots the better. But groups of as few as five shots can be statistically meaningful.
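For anyone curious how a five-shot comparison can reach significance, here is a minimal Python sketch of one way to test it. It is not necessarily the method my workbook uses: it swaps in an exact one-sided permutation test on each shot's distance from its own group center. The function names and the input format (a list of (x, y) shot coordinates per lot) are assumptions made for illustration.

```python
import itertools
import math
import statistics


def radial_distances(shots):
    """Distance of each shot from its group's own center (mean x, mean y)."""
    cx = statistics.fmean(x for x, _ in shots)
    cy = statistics.fmean(y for _, y in shots)
    return [math.hypot(x - cx, y - cy) for x, y in shots]


def permutation_p_value(group_a, group_b):
    """Exact one-sided permutation test (hypothetical helper).

    Tests whether group_a's mean radius is smaller than group_b's. Every way
    of splitting the pooled radii into sets of the original sizes is
    enumerated; the p-value is the fraction of splits whose difference in
    mean radius (b minus a) is at least as large as the observed difference.
    """
    ra = radial_distances(group_a)
    rb = radial_distances(group_b)
    pooled = ra + rb
    n_a, n_b = len(ra), len(rb)
    total = sum(pooled)
    observed = statistics.fmean(rb) - statistics.fmean(ra)

    at_least_as_extreme = 0
    splits = 0
    for idx in itertools.combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = (total - sum_a) / n_b - sum_a / n_a
        # Small tolerance so the observed split is not lost to rounding.
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
        splits += 1
    return at_least_as_extreme / splits
```

With two five-shot groups there are only C(10, 5) = 252 ways to split the pooled shots, so the smallest achievable one-sided p-value is 1/252 ≈ 0.004. That value is reached when every shot in one group sits closer to its center than any shot in the other, which is why five shots can be enough when the difference is large. More shots help because the standard error of a mean radius scales as s/√n. One caveat: radii measured from each group's own center are not strictly independent, so treat the p-value as approximate.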

If someone would like a copy of the Excel workbook used, I will be happy to provide it.

So, please take a gander and let me know what you think.