> You can read an entire dollar bill at 20 yards on max power

How does the glass compare to ZCO/Tangent?


Zeiss LRP S3: New First Focal Plane Riflescopes for Long-Range Precision Shooting

- Thread starter Lowlight


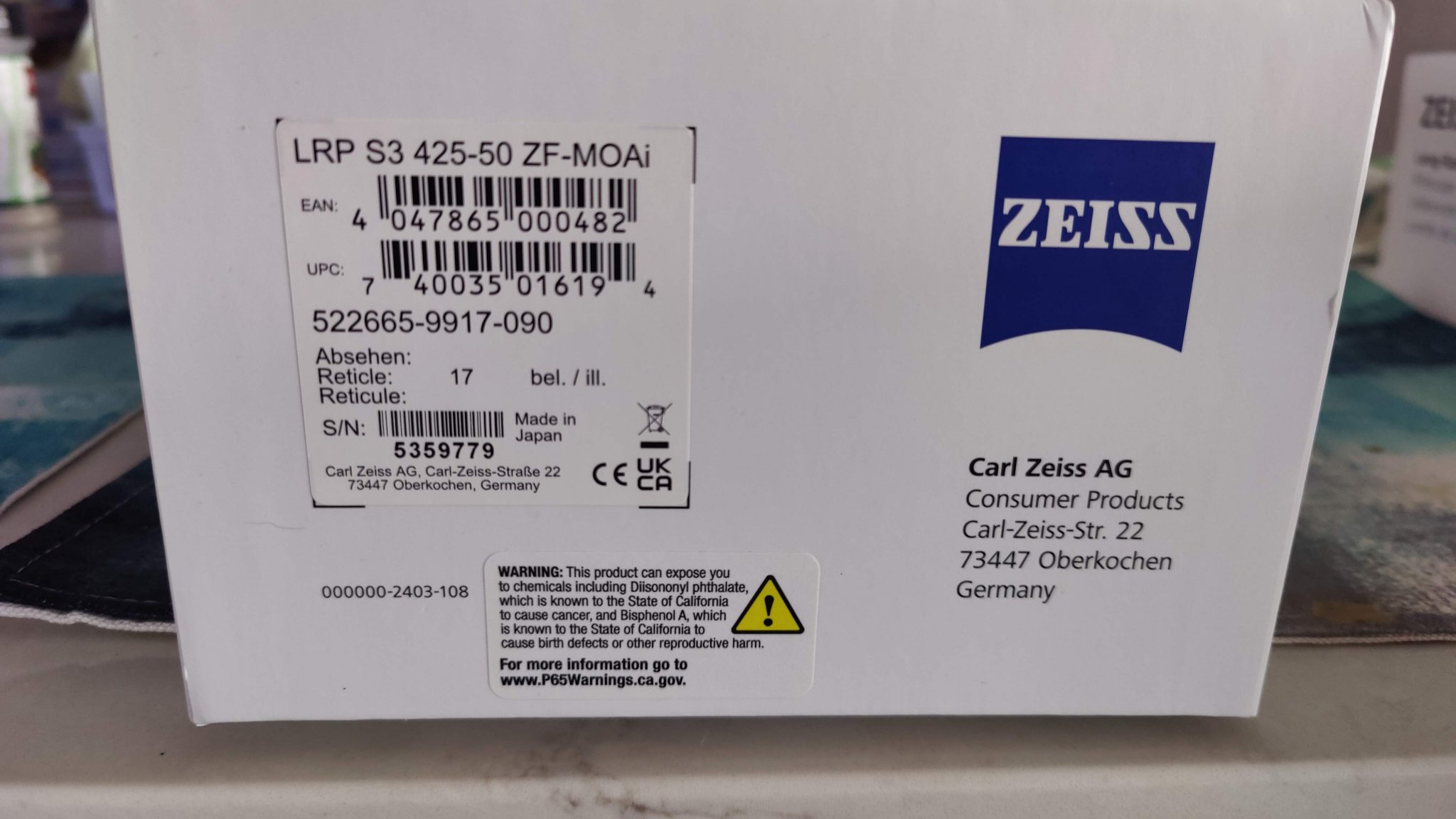

> That price point feels so incongruous with Zeiss and their optical quality. Is this a line not made in Europe?
>
> EDIT: made in Japan. So Zeiss is going to try to out-vortex Vortex?

Zeiss has for a very long time sourced some of its lower-level product lines. The Conquest and Conquest HD, for example, were/are made in Japan for the most part. For the money, it's hard to beat their quality, especially the binos.

Personally, I have trouble buying another OEM scope when there are so many similar options out there now: Leica, Vortex, Minox. You can still get a used Minox ZP5 around this price point, or buy a new LR for under $2K.

I posted a comparison with a ZCO in another thread. We can infer from it how this scope would compare to a Tangent, since I find the Tangent and ZCO to have similar clarity, with the ZCO having superior contrast/saturation and the Tangent a brighter image.

So, after hearing about it on the podcast today, the 6-36 has my interest.

Any idea how this stacks up against the Vortex Razor G-III 6-36x56, other than the price point?

Pictures coming as soon as I can.

I have a Vudoo barreled action ready to go and was set on the Mk5, but I think I will go this route for my rimfire build. So let me hear which magnification I should go with. And when is the release date? I must have missed it, but I believe October.

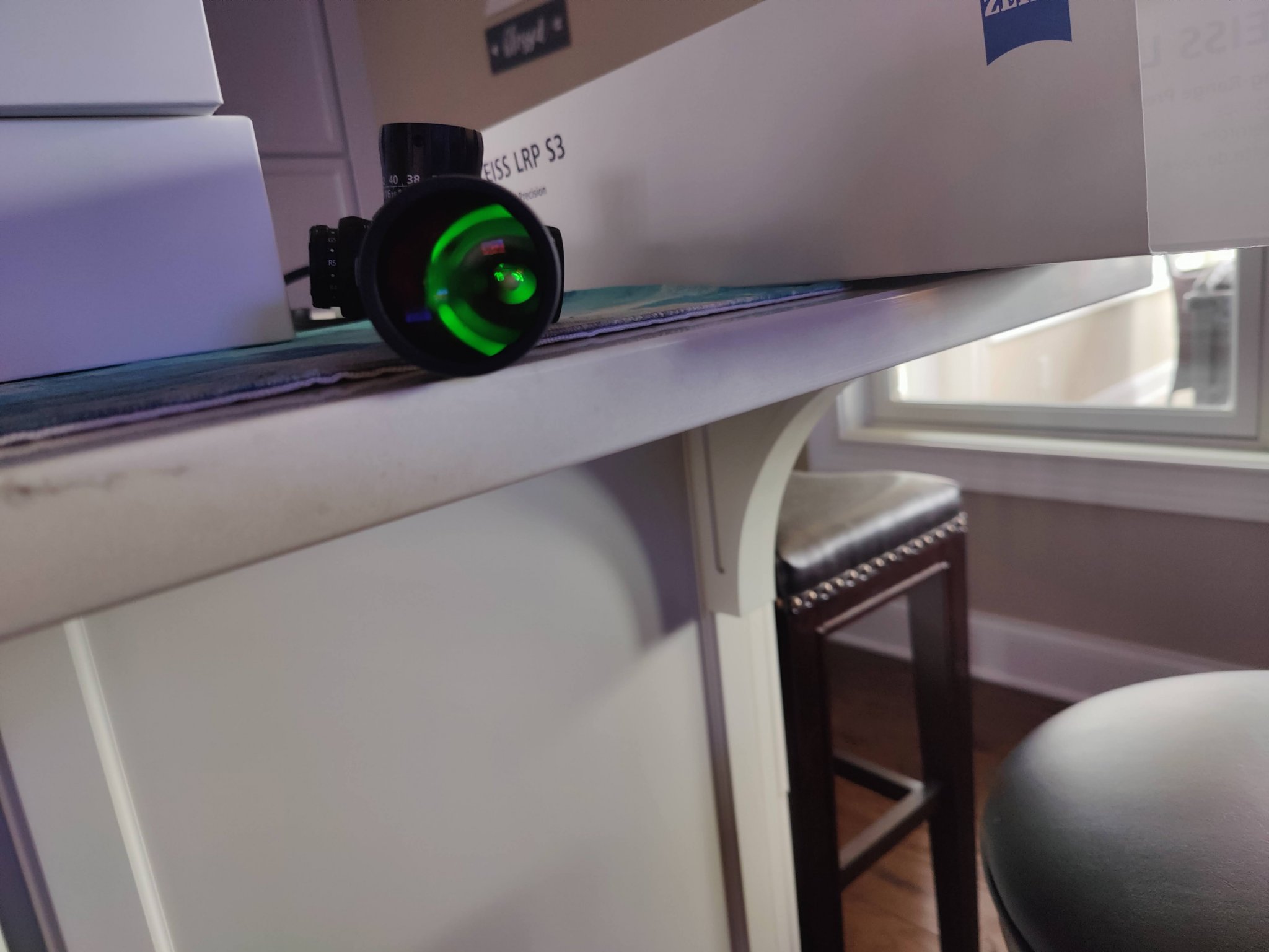

The 4-25 was out in limited numbers on launch day. Reticle illumination is by far the brightest I've seen in a non-LPVO scope. Turrets were nice and audible, with just the right amount of tension so you aren't skipping past numbers. The magnification ring was incredibly smooth and didn't need a throw lever.

We can dial past 500 yards with the 4-25x on our Vudoo. Let me know if that's tempting.

Ordered mine from MK the day after it was announced… impatiently waiting. Also curious, is there an approximate timeline?

Ordered the 4-25x.

Just got the first batch in. Did you order with a mount? If so, it's on the shipping table.

Depends on the company. Opinion of one, indicative of nothing: many take care of distributors first.

How do you think this scope measures up against the Athlon Cronus and Tract Toric?

Feels better than a Cronus. I haven't played with a Toric recently enough to offer input.

I just received mine from Mile High. I installed a 60 MOA base on my Vudoo and bore-sighted it. I’ll zero it at 50 this weekend, but with the rough bore-sighting I have 38 mils of elevation on the turret.

Out of curiosity, when you dial the scope all the way to max elevation, how much image degradation is there from center?

> It wasn’t bad. But I’m not an optic snob.

So for an optic snob, it might be pretty bad then.

After getting the gun zeroed, I have 42.3 mils of elevation.
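For reference, a minimal sketch of how much the 60 MOA base mentioned above amounts to in mils, assuming true angular MOA (1/60 of a degree) and milliradians. The function name is illustrative only, not taken from any app or library:

```python
import math

def moa_to_mil(moa: float) -> float:
    """Convert minutes of angle (1/60 degree) to milliradians."""
    radians = math.radians(moa / 60.0)  # MOA -> degrees -> radians
    return radians * 1000.0             # radians -> milliradians

# Hypothetical example: the cant of a 60 MOA base expressed in mils
base_cant_moa = 60.0
print(f"{base_cant_moa:.0f} MOA base = {moa_to_mil(base_cant_moa):.2f} mil")
```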

@Glassaholic - here's the relevant context: https://www.rokslide.com/forums/threads/zeiss-lrp-s5-3-18x50mm-field-eval.278228/

After the debacle with the LHT 4.5-22 "drop tests," I don't put much credence in most anything over on the slip 'n slide forum these days. I spent hours reading through all the diatribe, and at the end of the day I concluded that the authors and those supporting them had an extreme bias toward certain brands of scopes and treated those who disagreed with or questioned their process/findings as "beneath them." I decided they were not worth my time. But just like today's fake news coming out of the major rags, they got their intended job done: to dissuade people from buying certain scopes and steer them toward their scopes of choice. It's too bad; I think they could have been a much better service to the community and taken constructive criticism instead of acting like children with their feelings hurt.

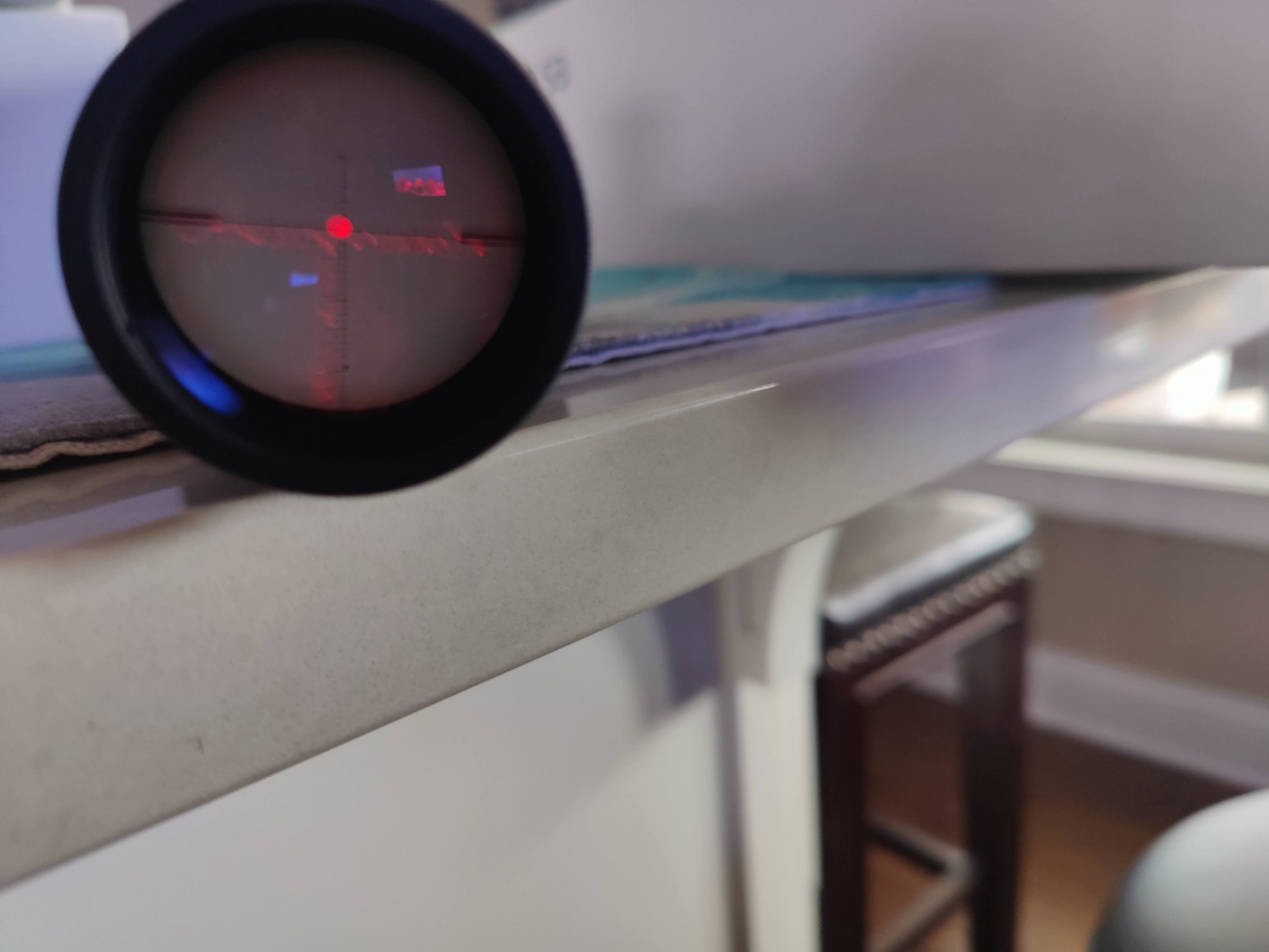

Yesterday I received my S3 4-25x50. Mine is very defective, and I have not mounted it. I talked to Joseph Israel at Zeiss, and he has sent a prepaid return label for a replacement. Mine will not focus at 100 yards. The illumination is messed up: it has five green and five red settings, but G1 is red and R5 is green. With red illumination on the lowest setting, the red bleeds out on the crosshairs and you can't make out the numbers or hash marks. Green isn't as bad. See the photo showing the R5 setting with green illumination. The other photo is the R1 setting, showing bleed-out on the crosshairs.

I'm not dissing Zeiss; if I were, I'd be returning it for a refund. Instead, I'm suggesting things to check before anyone mounts their scope.

Hi Bill,

I must admit, I don't get too caught up in the to-and-fro after the tests happen ... but my understanding is that the whole point of those tests is to have something relatively repeatable, which tests the most important aspect of a scope (reliability, not glass) - and let the chips fall where they may.

The tester has said repeatedly that they're not 'pro' or 'anti' any brand, and would love to see all scopes do well ... it's just that many, including some by 'big' names, simply fail.

Interestingly, his results parallel Mark and Frank's findings pretty closely, brand-wise, though theirs are sadly no longer published.

I would have thought all of these kinds of reference points were of relevance to all of us ... but most reviews continue to focus on things like glass and features, with reliability often extending to repeated dialling, but not impact testing.

> Hi Bill,
>
> I must admit, I don't get too caught up in the to-and-fro after the tests happen ... but my understanding is that the whole point of those tests is to have something relatively repeatable,

This has already been discussed ad nauseam in other threads, and I do not wish to stir those waters again; anyone who wants to can find them, I'm sure, especially as they relate to the LHT 4.5-22. That said, one of the issues raised by ILya and others (myself included) was the "repeatable" part: the way they perform their drop testing is not a reliable, repeatable method.

> which tests the most important aspect of a scope (reliability, not glass) - and let the chips fall where they may.

I agree that it would be nice to have a non-biased method of testing and, as you say, "let the chips fall where they may," but getting a reliable, repeatable method is the challenge. Yes, the authors over at Rokslide tried their best to find a method they thought would be just that, but when criticized they were not the most civil in their response: they were not open to criticism, insisted their method was the best way, and felt that proved their findings conclusive.

> The tester has said repeatedly that they're not 'pro' or 'anti' any brand, and would love to see all scopes do well ...

Right, just like Joe Biden says he wants the best for America. People can say whatever they'd like, but actions speak louder than words, and in other threads it was discovered that the authors did in fact have a history of bias toward certain manufacturers. I try to give people the benefit of the doubt and hope they are not being disingenuous in their reporting, and I'd like to think that is the case here, but again, their unwillingness to accept constructive criticism somewhat discredits their findings, IMO.

> it's just that many, including some by 'big' names, simply fail.

I have no doubt, but why they failed is the question. Did they fail because of something external to the scope itself? Was it mounted improperly? Were you using different rings/mounts? When you dropped scope A, was the angle slightly different from when you dropped scope B, putting additional stress on it? Was the ground harder when you tested scope A vs. scope B, and so forth? I commend them on the detail they give about how they perform their drop testing, but how repeatable is that test between different scopes? What was the sample size; was it one specific scope? We all know that every manufacturer is capable of producing a dud from time to time; even the most renowned manufacturers can have things "slip through the cracks," as they say. There have been a number of real-world reports on the various scopes in question (accidents, falls, bangs, and bouncing around in vehicles) where those scopes held zero for their users. How do you explain that?

> Interestingly, his results parallel Mark and Frank's findings pretty closely, brand-wise - now, sadly no longer published.

I think it is unfair to rate a "brand" based on findings for certain scopes; each scope, or maybe each model line, should be rated separately. Comparing one brand's "cheap" scope to another brand's "top" scope is somewhat unfair. All scopes will fail under certain circumstances, and I agree that some scopes may be more prone to this than others, but we need a much larger sample size to really determine whether that is the case. I think what Mark and Frank did with tracking was "better" with regard to repeatability, but the rug got pulled out from under them before they could really get started. Not much was said about that, but I can only imagine certain manufacturers applied some legal pressure, which shut that door real fast.

> I would have thought all of these kinds of reference points were of relevance to all of us ...

They are definitely relevant when done right. I have my concerns about the drop-testing methodology and the unseen motives that may be behind it. If a manufacturer makes a faulty product, by all means the community should be made aware, but declaring a product faulty when your methodology may be at fault can cause quite an issue (I think the fallout from those drop tests is a clear example of this).

> but most reviews continue to focus on things like glass and features, with reliability often extending to repeated dialling,

Because these are easier to quantify than informal impact testing or reliability in the field. I used to try to include tracking tests with scopes, but found that my small samples may differ from other people's experiences: a scope that tracks perfectly for me is a sample of one in my own environment, and you could have the same model and have it not track at all. I suppose the same could be said for the other items we tend to test, and I've started including "sample variation" comments in my testing. One clear example was the first Nightforce NX8 2.5-20 I had: the optical performance was pretty poor, which made the overall experience somewhat frustrating. Fast forward a few years, a friend sends me his newer NX8, and I have almost the complete opposite experience with that scope; the optical performance was excellent. Was the first scope a dud that somehow passed through QC? Were all the scopes in that first run from NF duds, and after extensive feedback on the optical aberrations, did NF decide to "fix" the issue? I have experienced this with other brands as well and am convinced that some manufacturers quietly address early issues with slight tweaks and fixes.

> but not impact testing.

Because how can you truly make this repeatable, given all the nuances that could affect outcomes? Yes, it would be nice to have some type of independent testing, but how do you guarantee your testing methodology is perfectly repeatable for all scopes without highly specialized equipment and environments?

Well, I said at the beginning that I didn't want to go down this rabbit trail again and here I am going down the trail...

> Interestingly, his results parallel Mark and Frank's findings pretty closely, brand-wise - now, sadly no longer published.

Are you talking about these results?

Scope Tracking Test Results 2020 (www.snipershide.com)

With PST and higher scopes, I’m not sure those results bolster your argument re: Vortex.

At all.

My 636 is in the mail from Doug.

I will check the scope out before mounting!

Thanks for the update.

-Richard


Hopefully you will get a good one. I believe I was just unfortunate.

Got some time behind one at a match. Like the reticle but the eyebox is kinda tight and there is a good amount of CA. I would rather find a used ZP5.

PLEASE keep this thread about the LRP S3...

Please keep that drop test BS off of this thread


> The drop test is about an S3

The Sniper's Hide tracking test results are great...

The Rokslide Drop Test, not so much...

I agree the tracking test is great.

Drop test is being held to a higher standard than other tests. Take all tests with a grain(s) of salt but censoring it is folly.

LMAO, if Zeiss had drop-tested some of those huge turrets... maybe they would have better understood why low-profile turrets on a rifle are a good idea?

Also, the industry has tried to suppress all of this information. The shoot-the-messenger BS could just as easily be aimed at Marc and Frank. If you have followed this discussion, you would know how this plays out (because it already happened).

If this impact testing were done in a controlled environment (an independent testing lab), where the scopes being tested are held in a locked apparatus and installed in the SAME action/mount/rings… and then impacted with the EXACT same amount of force in the EXACT same areas of the scope… then and only then will I give any credit to an impact test…

Jim Bob dropping a gun on the ground in an uncontrolled manner, and calling that a “test” … is laughable…

Not at all; this is exactly the "actual" risk the optic faces in the field.

Most of the optics companies I have been to (4+) have fixtures to test this stuff.

They have water tanks, pile drivers, shakers, etc...

This stuff is tested; in fact, whenever you go to an optics company, most of those fixtures are running. In Greeley at "Steiner / Burris," the pile driver shakes the whole place, as it drops and hits the stops.

These aren't any less robust than any other scope


Thanks for the feedback. Was your experience with the 4-25x50 or 6-36x56 model?

I've got comparison pics of the 6-36x to put up.

Not much CA that I have seen. I've got the 4-25x comparison up where you can check things out vs other comparables.


6-36. Shooting with a squad full of ZCOs and Tangents, the IQ difference was noticeable. I didn't hate it, but I would 100% get a used ZP5 with MR4 before buying that, or a Gen 3 Razor if I couldn't find one.

My illumination isn’t out of place on the knob, though I thought it was at first due to the offset indicator. Illumination doesn’t seem as bad as in your pictures, but pretty much from R2/G2 and up in intensity, the rest of the reticle has bleed issues that I’d expect from an EOTech, not a $2.5K-class scope. I suspect optical performance at the edge of travel is also compromised (there’s a lot of elevation travel, though), but there wasn’t enough daylight left to verify.

That's good. I hope to get mine back soon. Did yours focus correctly with the parallax setting? Mine was blurry.
