Rifle Scopes LPVO or Red dot/magnifier for SBR

- By freedom71

Instead of a red dot + magnifier, why not do a red dot + a 3x prism offset? That eliminates the need to flip your 3x magnifier back and forth.

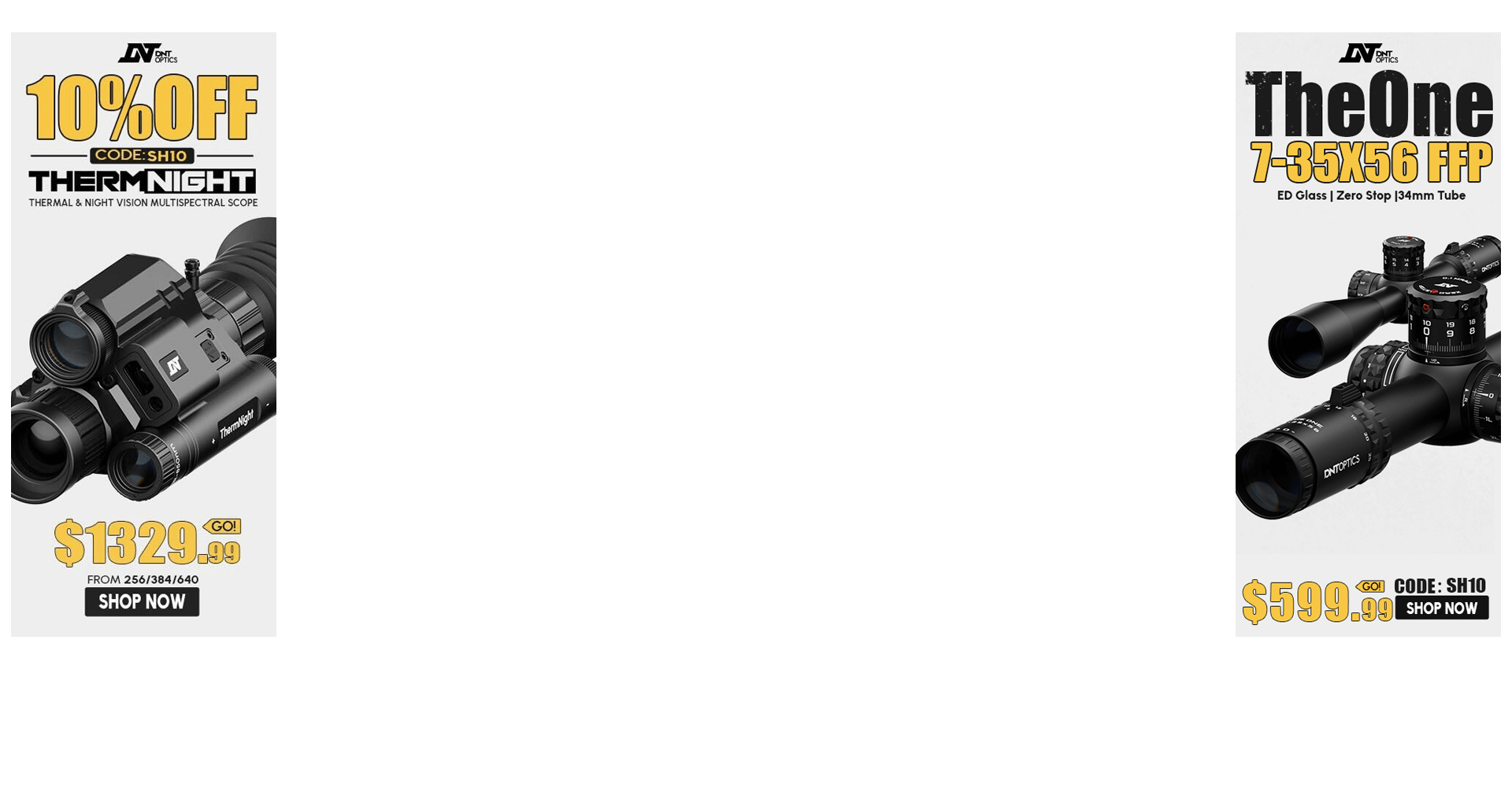